A new artificial intelligence (AI) tool can classify chemical reaction mechanisms from concentration data, with predictions that are 99.6% accurate even when the data are realistically noisy. Igor Larrosa and Jordi Bures of the University of Manchester have made the model freely available to help advance “the discovery and development of fully automated organic reactions”.

“There is a lot more information in the kinetic data than chemists have traditionally been able to extract,” comments Larrosa. The deep learning model “not only equals but exceeds what expert kinetic chemists would be able to do with previous tools,” he says.

Larrosa adds that chemistry is at a unique turning point for AI tools. With that in mind, the Manchester chemists sought to design a model with the ideal capabilities for classifying reactions. Bures and Larrosa combined two different neural networks. First, a long short-term memory (LSTM) neural network tracks changes in concentration over time. Second, a fully connected neural network processes the output of this first network.
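To make the two-stage design concrete, here is a minimal pure-Python sketch of a recurrent network feeding a fully connected layer. It assumes the first network is a standard long short-term memory (LSTM) cell and, for brevity, reduces the second to a single dense layer with a softmax. The layer sizes, the random untrained weights and the example profile are all hypothetical; this is an illustration of the data flow, not the authors' implementation.

```python
import math
import random

GATES = ("input", "forget", "cell", "output")

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def matvec(matrix, vector):
    # Multiply a matrix (list of rows) by a vector.
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]

def lstm_step(x, h, c, weights):
    # One LSTM time step; each gate has a matrix acting on [x, h] and a bias.
    xh = x + h
    pre = {}
    for name in GATES:
        w, b = weights[name]
        pre[name] = [z + bias for z, bias in zip(matvec(w, xh), b)]
    i = [sigmoid(z) for z in pre["input"]]
    f = [sigmoid(z) for z in pre["forget"]]
    g = [math.tanh(z) for z in pre["cell"]]
    o = [sigmoid(z) for z in pre["output"]]
    c_new = [fj * cj + ij * gj for fj, cj, ij, gj in zip(f, c, i, g)]
    h_new = [oj * math.tanh(cj) for oj, cj in zip(o, c_new)]
    return h_new, c_new

def softmax(logits):
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(profile, lstm_weights, dense_w, dense_b, hidden_size):
    # Stage 1: the LSTM reads the concentration profile point by point.
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for point in profile:
        h, c = lstm_step(list(point), h, c, lstm_weights)
    # Stage 2: a fully connected layer turns the final state into probabilities.
    logits = [z + b for z, b in zip(matvec(dense_w, h), dense_b)]
    return softmax(logits)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

# Hypothetical sizes and untrained random weights, for illustration only.
rng = random.Random(0)
input_size, hidden_size, n_classes = 2, 4, 20
lstm_weights = {
    name: (random_matrix(hidden_size, input_size + hidden_size, rng),
           [0.0] * hidden_size)
    for name in GATES
}
dense_w = random_matrix(n_classes, hidden_size, rng)
dense_b = [0.0] * n_classes

# Hypothetical profile: (substrate, product) concentrations at four times.
profile = [(1.0, 0.0), (0.7, 0.3), (0.4, 0.6), (0.2, 0.8)]
probabilities = classify(profile, lstm_weights, dense_w, dense_b, hidden_size)
```

With untrained weights the 20 output probabilities carry no chemical meaning; training is what would make the largest one point to a likely mechanism.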

The final model contains 576,000 trainable parameters. Parameters describe “the mathematical operations that are performed on the kinetic profile data,” says Larrosa. These operations then produce probabilities for which mechanism the data come from. “For comparison, AlphaFold uses 21 million parameters and GPT-3 uses 175 billion parameters,” he adds.
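As a rough guide to where trainable parameters come from, the sketch below counts the weights and biases of a textbook LSTM layer and a fully connected layer. The layer sizes are hypothetical and are not meant to reproduce the 576,000 figure, since the article does not give the model's layer dimensions. Some libraries also store a second bias vector per gate, which raises the count slightly.

```python
def lstm_parameter_count(input_size, hidden_size):
    # Four gates, each with a weight matrix over [input, hidden] plus one bias.
    return 4 * (hidden_size * (input_size + hidden_size) + hidden_size)

def dense_parameter_count(n_inputs, n_outputs):
    # One weight per input-output pair plus one bias per output.
    return n_inputs * n_outputs + n_outputs

# Hypothetical sizes: 2 input channels, 64 hidden units, 20 output classes.
lstm_params = lstm_parameter_count(input_size=2, hidden_size=64)
dense_params = dense_parameter_count(n_inputs=64, n_outputs=20)
total_params = lstm_params + dense_params
```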

Catalyst Information

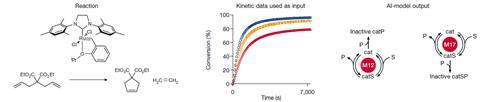

Bures and Larrosa trained the model with 5 million simulated kinetic samples, each labeled with the one of 20 common catalytic reaction mechanisms it corresponds to. Once the model has learned to recognize the characteristics of the kinetic data associated with each reaction mechanism, it “applies these rules to the new input kinetic data to classify them,” says Bures. The first of the 20 is the simplest catalytic mechanism, described by the Michaelis-Menten model. Bures and Larrosa group the rest into mechanisms involving bicatalytic steps, those with catalyst activation steps and those with catalyst deactivation steps, the latter being the largest group.
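A simulated kinetic sample for the simplest case can be sketched by integrating the rate equations of the Michaelis-Menten mechanism (substrate binds catalyst reversibly, then the complex releases product). The example below uses a hand-written fourth-order Runge-Kutta integrator in plain Python. The rate constants, starting concentrations and time grid are hypothetical values chosen for illustration, and the article does not say how the authors generated their own data.

```python
def mm_rates(state, k1, k_1, k2):
    # Elementary steps: S + cat <-> cat.S (k1, k_1), then cat.S -> P + cat (k2).
    s, cat, cat_s, p = state
    bind = k1 * s * cat
    unbind = k_1 * cat_s
    turnover = k2 * cat_s
    return (
        -bind + unbind,             # d[S]/dt
        -bind + unbind + turnover,  # d[cat]/dt
        bind - unbind - turnover,   # d[cat.S]/dt
        turnover,                   # d[P]/dt
    )

def shifted(state, deriv, scale):
    return tuple(x + scale * d for x, d in zip(state, deriv))

def rk4_step(state, dt, k1, k_1, k2):
    # Classical fourth-order Runge-Kutta update.
    a = mm_rates(state, k1, k_1, k2)
    b = mm_rates(shifted(state, a, dt / 2), k1, k_1, k2)
    c = mm_rates(shifted(state, b, dt / 2), k1, k_1, k2)
    d = mm_rates(shifted(state, c, dt), k1, k_1, k2)
    return tuple(
        x + dt / 6 * (da + 2 * db + 2 * dc + dd)
        for x, da, db, dc, dd in zip(state, a, b, c, d)
    )

def simulate_profile(s0=1.0, cat0=0.05, k1=10.0, k_1=1.0, k2=5.0,
                     t_end=20.0, n_steps=2000):
    # All default values are hypothetical, in arbitrary consistent units.
    dt = t_end / n_steps
    state = (s0, cat0, 0.0, 0.0)
    times, profile = [0.0], [state]
    for step in range(1, n_steps + 1):
        state = rk4_step(state, dt, k1, k_1, k2)
        times.append(step * dt)
        profile.append(state)
    return times, profile

times, profile = simulate_profile()
substrate, catalyst, complex_, product = profile[-1]
```

Each tuple in `profile` holds the substrate, free catalyst, catalyst-substrate complex and product concentrations at one time point. A training sample would pair such a profile with the label of the mechanism that produced it.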

Simulated data is necessary for high classification performance, adds Bures, because experimental data is inevitably noisy and difficult to interpret. “The chemist’s experimental data and corresponding conclusions should not be used for training, because the resulting model would be, at best, as accurate as an average chemist, and more likely less accurate,” he says.

To test the trained model, Bures and Larrosa used more simulated data, which produced only 38 misclassifications in 100,000 samples. To simulate real-world experiments more closely, the chemists added noise to the data. This reduced accuracy to 99.6% with realistic noise levels and 83% with what Larrosa calls “the absurd extreme of noisy data”.
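The noise-free figure can be checked with simple arithmetic, and the idea of corrupting a clean profile can be sketched in a few lines. The Gaussian noise model, the noise level and the example profile below are assumptions made for illustration; the article does not specify how the noise was generated.

```python
import random

def accuracy(n_misclassified, n_total):
    # Fraction of samples classified correctly.
    return (n_total - n_misclassified) / n_total

def add_gaussian_noise(values, sigma, rng):
    # Assumed noise model: independent zero-mean Gaussian error per point.
    return [v + rng.gauss(0.0, sigma) for v in values]

# 38 misclassifications in 100,000 noise-free test samples.
clean_accuracy = accuracy(38, 100_000)
print(f"{clean_accuracy:.3%}")  # prints 99.962%

# Hypothetical concentration profile and noise level.
rng = random.Random(0)
clean_profile = [1.0, 0.8, 0.6, 0.45, 0.3]
noisy_profile = add_gaussian_noise(clean_profile, sigma=0.02, rng=rng)
```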

The chemists also applied the model to data from previously published experiments. “Although the correct answer to these questions cannot be known, the model proposed chemically sound mechanisms,” says Larrosa. The results also provided new insights into how the catalysts for reactions such as ring-closing metathesis and cycloadditions break down. “Understanding catalyst decomposition pathways is extremely important in order to be able to create reproducible processes,” emphasizes Larrosa.

Marwin Segler of Microsoft Research AI4Science calls the work “a fantastic demonstration of how machine learning can help creative scientists unravel nature and solve difficult chemical problems.” “We need better tools like this to discover new reactions to make new drugs and materials and to make chemistry greener,” he says. “It also highlights how powerful simulations can be for training AI algorithms, and we can expect to see more of that.”